Model objects

After your model is trained, you want to be able to make use of it later for validation or production scoring. In some situations, like when your end goal is to produce a static report, this may not be necessary, but even in these cases it may be useful to reproduce the scores later.

A model object is an R object that is able to reproduce the scores generated during training on the same messy, production data that was used to train the model. Typically, R model objects like `lm` or `gbm` objects are insufficient for reproducing scores in a live scoring environment, because they do not carry the feature engineering applied to the raw data: you will have to "productionize" your feature engineering pipeline as well after the data scientists have prototyped it.

In the Syberia modeling engine, feature engineering is a critical production-ready aspect of the process that does not require any additional code: the code you ran in mungebits and the parameters that were selected during training (for example, the columns that were dropped after checking for correlations, the means of features to use during imputation, etc.) are stored on the model object.

This allows us to faithfully replicate the end-to-end data science process that produced the model on single rows that come through in a production system. Alternatively, we can use the model object to generate validation scores on new samples from the original distribution, e.g., when new customers arrive or when we get additional data.
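To make the idea of stored training parameters concrete, here is a minimal base-R sketch of a single imputation step. `make_imputer` and its `train`/`predict` pair are hypothetical names for illustration, not the mungebits API: the step learns column means during training and reuses them when a single production row arrives.

```r
# Hypothetical sketch (not the mungebits API): one feature-engineering step
# that learns column means at training time and replays them at prediction time.
make_imputer <- function() {
  state <- new.env()  # parameters learned during training live here

  fill <- function(df) {
    for (col in names(state$means)) {
      missing <- is.na(df[[col]])
      df[[col]][missing] <- state$means[[col]]
    }
    df
  }

  list(
    train = function(df) {
      num_cols <- names(df)[vapply(df, is.numeric, logical(1))]
      state$means <- vapply(df[num_cols], mean, numeric(1), na.rm = TRUE)
      fill(df)
    },
    predict = function(df) fill(df)  # reuses the stored training means
  )
}

train_df <- data.frame(x = c(1, 2, NA, 5), y = c(10, NA, 30, 40))
imputer <- make_imputer()
trained <- imputer$train(train_df)

# A single production row: its missing x is filled with the training mean
# of x (8/3), not with a statistic computed from this one row.
new_row <- data.frame(x = NA_real_, y = 25)
scored <- imputer$predict(new_row)
```

The point of the design is that `predict` never recomputes anything from the incoming data; it only replays what `train` recorded.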

The tundra container

The default model object container that ships with the modeling engine is a tundra container (tundra because it freezes your model for production use, and tundra is in Syberia... get it?).

A tundra container is an R6 object that consists of a munge procedure, a train function, a predict function and inputs saved during training. The munge procedure is copied directly from the data stage.

```r
# Place this code in models/dev/example3.R
list(
  import = list(R = "iris"),

  data = list(
    "Create a dependent variable" = list(renamer ~ NULL, c("Sepal.Length" = "dep_var")),
    "Create a primary key variable" = list(multi_column_transformation(seq_along) ~ NULL, "dep_var", "id"),
    "Drop categorical features" = list(drop_variables, is.factor)
  ),

  model = list("lm", .id_var = "id"),

  export = list(R = "model")
)
```

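Note that we have added `~ NULL` to the first two steps. This is very important to ensure the model predicts correctly: the function on the left of `~` runs during training, while the `NULL` on the right means the step does nothing during prediction. We do not want to rename the dependent variable during prediction, nor do we want to create an `id` column. Now let's execute the model.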
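The train/predict pairing can be sketched in plain R. Assuming a hypothetical `run_step` helper (this is not how mungebits is implemented), a step whose prediction function is `NULL` simply passes the data through at prediction time:

```r
# Hypothetical helper: a step is list(train = <function>, predict = <function or NULL>).
run_step <- function(df, step, training) {
  fn <- if (training) step$train else step$predict
  if (is.null(fn)) df else fn(df)  # NULL means "leave the data unchanged"
}

rename_dep_var <- list(
  train = function(df) {
    names(df)[names(df) == "Sepal.Length"] <- "dep_var"
    df
  },
  predict = NULL  # the analogue of `renamer ~ NULL`
)

train_out   <- run_step(iris, rename_dep_var, training = TRUE)
predict_out <- run_step(iris[1:5, ], rename_dep_var, training = FALSE)
names(train_out)[1]    # "dep_var"
names(predict_out)[1]  # "Sepal.Length"
```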

```r
run("example3")
```

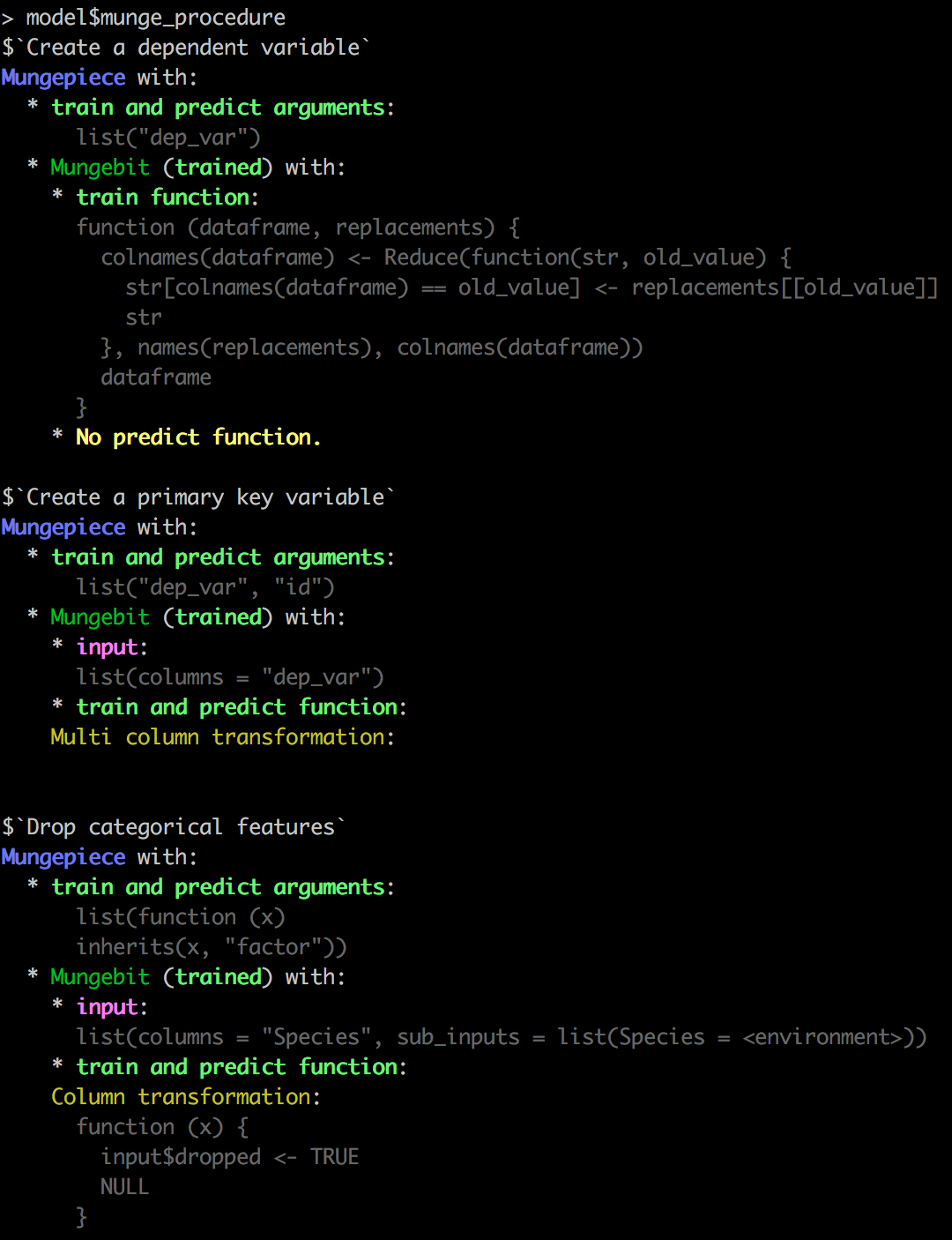

```r
model$munge_procedure
model$predict_fn
```

You should see some colorful output!

Let's try to make a prediction: `model$predict(iris[1:5, ])`. You can also inspect how the model is munging the data using `model$munge(iris)`, or even execute just a subset of the munge procedure using `model$munge(iris, 1)`, `model$munge(iris, 1:2)`, `model$munge(model$munge(iris, 1), 2)`, etc. This can be very helpful for debugging.
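Running a subset of steps works because a munge procedure is an ordered list of transformations, so running steps `1:2` is the same as feeding the output of step 1 into step 2. A hypothetical base-R `munge` function with two made-up steps illustrates this composition:

```r
# Hypothetical munge procedure: an ordered list of prediction-time steps.
procedure <- list(
  function(df) df[, names(df) != "Species", drop = FALSE],  # step 1: drop the factor column
  function(df) {                                           # step 2: add a derived feature
    df$width_ratio <- df$Sepal.Width / df$Petal.Width
    df
  }
)

# Run only the requested steps, in order, feeding each step's output to the next.
munge <- function(df, steps = seq_along(procedure)) {
  Reduce(function(acc, i) procedure[[i]](acc), steps, df)
}

all_steps    <- munge(iris[1:5, ])
step_by_step <- munge(munge(iris[1:5, ], 1), 2)
identical(all_steps, step_by_step)  # TRUE
```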

Serialization of model objects

R has Lisp roots, and most R objects, including model objects, are recursively composed of lists and atomic vectors. This makes serialization very easy in R! In fact, the built-in `saveRDS` is capable of serializing most objects. (If you have external pointers to C structures or difficult-to-serialize environment objects, you can define a custom serializer; see the appendix.)

To save the model object to a file, simply use saveRDS:

```r
dir.create("~/tmp", showWarnings = FALSE)  # no warning if the directory already exists
saveRDS(model, "~/tmp/model.rds")
readRDS("~/tmp/model.rds")$predict(iris[1:5, ])
```

You will notice the predictions are the same as before.
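The round trip can also be checked without the modeling engine. A minimal sketch using only base R, with a plain `lm` fit standing in for the tundra container and `tempfile()` standing in for `~/tmp`:

```r
fit <- stats::lm(Sepal.Length ~ Sepal.Width, data = iris)

path <- tempfile(fileext = ".rds")  # a throwaway file instead of ~/tmp
saveRDS(fit, path)
restored <- readRDS(path)

before <- stats::predict(fit, newdata = iris[1:5, ])
after  <- stats::predict(restored, newdata = iris[1:5, ])
all.equal(before, after)  # TRUE
```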

Constructing model objects

Let's create a model object directly.

```r
model <- tundra::tundraContainer$new("lm", function(data) {
  output$model <- stats::lm(Sepal.Length ~ Sepal.Width, data = data)
}, function(data) {
  # predict.lm expects the new rows in `newdata`, not `data`
  stats::predict(output$model, newdata = data)
})

model$train(iris)
model$predict(iris[1, ])
```

Let us first note that this is a terrible model specification. The training function directly encodes `Sepal.Length ~ Sepal.Width` rather than parametrizing the dependent and independent variables. Under the hood, all examples of classifiers in the modeling engine build tundra container objects.
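One way to avoid hard-coding the formula, sketched here under the assumption that the dependent and independent variables arrive in a parameter list (the names `train_lm`, `dep_var`, and `features` are hypothetical), is to build the formula at training time with base R's `reformulate`:

```r
# Hypothetical parametrized training function: the response and predictors
# come from `params` instead of being hard-coded in the formula.
train_lm <- function(data, params) {
  model_formula <- stats::reformulate(params$features, response = params$dep_var)
  stats::lm(model_formula, data = data)
}

params <- list(dep_var = "Sepal.Length", features = c("Sepal.Width", "Petal.Length"))
fit <- train_lm(iris, params)
all.vars(stats::formula(fit))  # "Sepal.Length" "Sepal.Width" "Petal.Length"
```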

The general format for a model container is

```r
model <- tundra::tundraContainer$new(
  keyword          = "lm",     # Keyword describing the model
  train_function   = identity, # Training function
  predict_function = identity, # Prediction function
  munge_procedure  = list(),   # A list of mungepieces to apply before
                               # training / prediction
  default_args     = list(),   # Default training arguments
  internal         = list()    # A list for specifying additional attributes, e.g.,
                               # domain-specific metadata for the model
)
```

Note the last three arguments. By default, tundra containers perform no feature engineering on the data given to the train or predict function.

However, it is possible to specify a list of mungepieces that will effectively make the model object capable of handling arbitrarily messy production or validation data during prediction: the munge procedure simply gets executed using the predict versions of the mungepieces prior to running the predict function.
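The order of operations can be sketched with closures instead of R6. `make_container` below is a hypothetical stand-in for a tundra container, not its real implementation: training runs the train version of every mungepiece, while prediction runs only the predict versions (skipping `NULL` ones) before calling the predict function, so messy input with a character `Sepal.Width` column is cleaned up first.

```r
# Hypothetical closure-based stand-in for a tundra container (the real one is R6).
make_container <- function(train_fn, predict_fn, munge_procedure = list()) {
  output <- new.env()  # state produced by training, read back by predict_fn

  run_munge <- function(df, training) {
    for (step in munge_procedure) {
      fn <- if (training) step$train else step$predict
      if (!is.null(fn)) df <- fn(df)  # a NULL function means "skip this step"
    }
    df
  }

  list(
    train = function(df) {
      train_fn(run_munge(df, training = TRUE), output)
      invisible(NULL)
    },
    predict = function(df) predict_fn(run_munge(df, training = FALSE), output)
  )
}

to_numeric_width <- function(df) {
  df$Sepal.Width <- as.numeric(df$Sepal.Width)
  df
}

steps <- list(
  list(train = function(df) {  # train-only step, like `renamer ~ NULL`
         names(df)[names(df) == "Sepal.Length"] <- "dep_var"
         df
       },
       predict = NULL),
  list(train = to_numeric_width, predict = to_numeric_width)
)

model <- make_container(
  train_fn = function(df, output) {
    output$fit <- stats::lm(dep_var ~ Sepal.Width, data = df)
  },
  predict_fn = function(df, output) {
    unname(stats::predict(output$fit, newdata = df))
  },
  munge_procedure = steps
)

model$train(iris)

# Production data arrives with numbers stored as strings.
messy <- data.frame(Sepal.Width = c("3.5", "3.0"), stringsAsFactors = FALSE)
model$predict(messy)
```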

Help from the modeling engine

The modeling engine abstracts away the creation of a tundra container by following the convention below.

- Classifiers, meaning pairs of train and predict functions on the training data, are specified in the `lib/classifiers` directory by defining a `train` and a `predict` local variable representing the training and prediction functions, respectively.
- Classifiers are parametrized by a list which is passed as the second argument of the training function. The value given by the `model` key in the model file is a list specifying the parameters to be passed to the training function.
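As a rough sketch of the first convention, a classifier file is just R code that leaves `train` and `predict` behind as local variables. The file contents, `params$dep_var`, and the explicit `state` argument to `predict` are assumptions for illustration (in the tundra example above, state is shared through `output` instead); the file is evaluated in its own environment with base R's `sys.source`:

```r
# Hypothetical classifier file: it only defines `train` and `predict`.
classifier_code <- c(
  "train <- function(data, params) {",
  "  # params stands in for the list given by the model file's `model` key",
  "  list(mean = mean(data[[params$dep_var]]))",
  "}",
  "predict <- function(data, state) {",
  "  rep(state$mean, nrow(data))  # a constant (baseline) prediction",
  "}"
)
path <- tempfile(fileext = ".R")
writeLines(classifier_code, path)

# Evaluate the file in its own environment and pick up its local variables.
classifier <- new.env()
sys.source(path, envir = classifier)

params <- list(dep_var = "Sepal.Length")
state <- classifier$train(iris, params)
preds <- classifier$predict(iris[1:3, ], state)
```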

With these two abstractions in hand, we can cleanly create portable model containers that can be used for running validation experiments on future distributions of the data or even live in production systems.


Appendix: Model deflation

R's easy serialization capability seems like a free lunch, but there is a dangerous trade-off. If you use environments or functions (which implicitly have an environment attached) anywhere in your model objects, it is possible to capture far more than you intended upon serialization.

For example, instead of capturing just the model objects, you might capture every object in the environment where a function was defined (such as a large training data set that happened to live alongside a helper function) and store gigabytes of unnecessary serialized R objects! This is less of a problem in languages like Python or Java, where serialization (pickling, in Python) is defined per class and functions are not bundled together with their enclosing scope.

To relieve this issue, the modeling engine comes with some simple deflation triggers that prevent any closures (functions) on the model object from carrying too much information. Because R is such a dynamic language, there is in general no way of identifying what a given function will need, but we try our best: we assume the train and predict functions of a classifier are pure.

Pure functions, in this sense, do not make use of global variables or variables in the parent environment of the function. This rules out calling helper functions defined outside the train or predict function, but increases stability and serializability. Simply put your helpers in the body of the train or predict functions.
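The cost of a captured environment is easy to measure with base R's `serialize`. In this sketch (`make_predictor` is hypothetical), the returned function never uses `training_data`, yet serializing it drags the whole vector along. Because the function is pure (its helper lives in its body), resetting its environment to the global environment, which R serializes as a reference rather than by value, is safe and shrinks it dramatically:

```r
make_predictor <- function() {
  training_data <- rnorm(1e6)  # large local object that the predictor never uses
  function(x) {
    rescale <- function(v) 2.5 * v  # helper defined inside the body keeps the function pure
    rescale(x)
  }
}

predictor <- make_predictor()
size_captured <- length(serialize(predictor, NULL))  # includes training_data (~8 MB)

# Safe only because `predictor` has no free variables.
environment(predictor) <- globalenv()
size_deflated <- length(serialize(predictor, NULL))

c(captured = size_captured, deflated = size_deflated)
```

Do this only for genuinely pure functions: a function with free variables would fail at prediction time once its environment is gone.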

If you find your model objects getting unexpectedly large, carefully examine your code and what you are storing in `input`, or modify the model stage accordingly.

Appendix: Custom Serializers

A lot of R models serialize out-of-the-box, but some models (like xgboost) represent their underlying state in a C structure which is not natively serializable by R.

For such models, you may have to define additional serialization procedures. TODO: Explain how.